GPU TPS Performance Comparison

This blog post describes a workflow, with the associated Python code, for checking which of two GPU machines is faster on a given database. We tested the workflow on ~900 scientific papers in PDF format totaling 2 GB. If your computational resources are too limited for your full database, we recommend shrinking it by picking a sample set that best represents the actual data. The workflow takes a database of scientific papers in PDF format and produces a detailed comparison, in tokens per second (TPS), for each individual file on both GPU machines, along with a final percentage showing how many of the input files ran faster on one GPU machine than on the other. So far, the workflow is designed to compare two GPU machines. It consists of three sequential steps.

Step 1 – Preprocessing the database.

Before running the language model (Step 2) on the database, we need to make sure that it contains no non-PDF files and no nested directories. Because files are moved from nested directories into one main directory, file names can clash. To avoid this, “_1” is appended to the name of the clashing file and the incident is reported in the log file. Finally, some files may not be openable, so we want to catch them before feeding them to the language model in Step 2. This step therefore deletes those files and writes the incident to the log file. To carry out all of these tasks, we have developed a Python script (1Preproc_A_onlyPDFsTOsingleDirectory.py), which is linked at the bottom of this step. It performs the following tasks:

- Counting File Types:

– It counts the number of files of each type present in the specified directory.

– File types are determined by their extensions (e.g., “.pdf”, “.txt”, etc.).

– It also calculates the total size of each file type in terms of gigabytes (GB).

- Moving PDF Files:

– It identifies all PDF files within the directory, regardless of the letter case of the extension (e.g., “.pdf”, “.PDF”, “.pDF”, etc.).

– PDF files are moved to a newly created directory named “1GB_data_pdfs” within the same directory.

– If there are multiple PDF files with the same name, it renames them to avoid conflicts.

– It checks if each PDF file is openable. If a file cannot be opened for any reason, it is deleted, and the error is logged in a file named “1GB_data_pdfs_LOG.txt”.

- Logging:

– It logs all actions and errors encountered during the process, including file renaming and deletion due to inability to open.

- Output:

– After processing, it prints a summary of the count and size of each file type, as well as the total number of files processed.

– Additionally, it prints the total number of PDF files transferred and their cumulative size in terms of gigabytes.
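The counting task described above can be sketched in a few lines. This is a minimal illustration, not the linked script: the function name `count_file_types` is hypothetical, the walk is assumed to include nested directories, and a gigabyte is assumed to mean 1024³ bytes.

```python
import os
from collections import Counter


def count_file_types(directory_path):
    """Return (counts, sizes_gb), both keyed by lower-cased file extension."""
    counts = Counter()
    size_bytes = Counter()
    # os.walk also visits nested directories (assumed to be wanted here).
    for root, _dirs, files in os.walk(directory_path):
        for name in files:
            ext = os.path.splitext(name)[1].lower() or "(no extension)"
            counts[ext] += 1
            size_bytes[ext] += os.path.getsize(os.path.join(root, name))
    # Assumption: 1 GB = 1024**3 bytes.
    sizes_gb = {ext: n / 1024**3 for ext, n in size_bytes.items()}
    return counts, sizes_gb


if __name__ == "__main__":
    directory_path = "1GB_data"  # input directory name used in this post
    counts, sizes_gb = count_file_types(directory_path)
    for ext, n in counts.most_common():
        print(f"{ext}: {n} files, {sizes_gb[ext]:.3f} GB")
    print("Total files:", sum(counts.values()))
```

Lower-casing the extension is what makes “.pdf”, “.PDF” and “.pDF” count as one file type.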

Usage:

- Set the directory path (directory_path) to the location containing the files to be processed.

- Run the script, and it will organize the PDF files within the directory and provide a summary of the process.

Dependencies: The script requires only the Python standard library: the os, shutil, and logging modules, plus the Counter class from the collections module.

The following link points to this Python code:

https://github.com/JayKayNJIT/InnovisionLLC/blob/845b664f0fa79c28359651d3e142ece761bb683b/1Preproc_A_onlyPDFsTOsingleDirectory.py

The output of this code is a directory titled “1GB_data_pdfs”, created in the same directory that holds the input directory “1GB_data”. The resulting directory is free of non-PDF files and contains only files, with no nested directories. The code also prints the database statistics to the terminal.
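To make the move, rename and delete behavior of this step concrete, here is a minimal sketch under stated assumptions. The names `unique_destination`, `looks_like_pdf` and `collect_pdfs` are hypothetical, and the openability check (the file opens and starts with the `%PDF-` header) is an assumption; the linked script may test openability differently.

```python
import logging
import os
import shutil


def unique_destination(dest_dir, filename):
    """Append "_1" to the stem until the name is unused in dest_dir."""
    stem, ext = os.path.splitext(filename)
    candidate = filename
    while os.path.exists(os.path.join(dest_dir, candidate)):
        stem += "_1"
        candidate = stem + ext
    return os.path.join(dest_dir, candidate)


def looks_like_pdf(path):
    """Assumed openability check: file opens and starts with the PDF header."""
    try:
        with open(path, "rb") as handle:
            return handle.read(5) == b"%PDF-"
    except OSError:
        return False


def collect_pdfs(source_dir, dest_dir, log_path):
    """Move every PDF under source_dir into the flat directory dest_dir."""
    os.makedirs(dest_dir, exist_ok=True)
    handler = logging.FileHandler(log_path)
    logger = logging.getLogger("pdf_preproc")
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    moved = 0
    try:
        # Build the full list first so files moved during the loop are not revisited.
        pdf_paths = [
            os.path.join(root, name)
            for root, _dirs, files in os.walk(source_dir)
            for name in files
            if name.lower().endswith(".pdf")
        ]
        for path in pdf_paths:
            if not looks_like_pdf(path):
                os.remove(path)
                logger.error("Deleted unopenable file: %s", path)
                continue
            target = unique_destination(dest_dir, os.path.basename(path))
            if os.path.basename(target) != os.path.basename(path):
                logger.info("Renamed %s to %s", path, os.path.basename(target))
            shutil.move(path, target)
            moved += 1
    finally:
        logger.removeHandler(handler)
        handler.close()
    return moved
```

A call such as `collect_pdfs("1GB_data", "1GB_data_pdfs", "1GB_data_pdfs_LOG.txt")` would mirror the directory and log names used in this post.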

Step 2 – Running LLM on database files.

This step feeds all PDF files, one by one, to the language model and writes the results to an output file, “title.txt”, in the working directory. First create three directories: “library_in”, “library_out” and “read”. The “read” directory should contain the whole database you want to process, i.e. the output of Step 1, while “library_in” and “library_out” should be left empty. The code takes one PDF file at a time out of “read” and moves it into “library_in” before processing it with the language model. It then moves that file from “library_in” into “library_out”, and repeats this cycle until the “read” directory is empty. When the code finishes, all of the database files will have moved from “read” to “library_out”. This step may take multiple days, depending on the size of your database and on the language model. The payload output of this step is the file “title.txt”, generated in the directory that hosts “read”; this file is needed for the next step. You need to execute this step twice, once on the first GPU machine and once on the second, which yields two “title.txt” files. Because both files must be placed in the same directory in the next step, their names cannot be identical, so rename them, for example by appending the GPU name. In our case, we named them title3060.txt and title3090_Ti.txt. Currently, this script is designed for the Llama-2 7B Q4 language model with a General Text Embeddings (GTE) model.

GitHub link to the Python code:
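The directory cycle described above (“read” to “library_in” to “library_out”) can be sketched as follows. This is only an illustration of the file movement: `run_model_on_pdf` is a hypothetical placeholder for the real language-model call, and the lines written to “title.txt” here are a made-up format, not the output format of the linked script.

```python
import os
import shutil
import time


def run_model_on_pdf(pdf_path):
    """Hypothetical placeholder for the real language-model call."""
    return f"(model output for {os.path.basename(pdf_path)})"


def process_queue(base_dir, output_name="title.txt", process=run_model_on_pdf):
    """Cycle each file through read -> library_in -> library_out."""
    read_dir = os.path.join(base_dir, "read")
    in_dir = os.path.join(base_dir, "library_in")
    out_dir = os.path.join(base_dir, "library_out")
    for directory in (read_dir, in_dir, out_dir):
        os.makedirs(directory, exist_ok=True)

    processed = 0
    out_path = os.path.join(base_dir, output_name)
    with open(out_path, "a", encoding="utf-8") as out:
        for name in sorted(os.listdir(read_dir)):
            # Stage exactly one file in library_in before processing it.
            staged = shutil.move(os.path.join(read_dir, name),
                                 os.path.join(in_dir, name))
            start = time.perf_counter()
            response = process(staged)
            elapsed_ms = (time.perf_counter() - start) * 1000
            # Illustrative record layout only (an assumption).
            out.write(f"File: {name}\nResponse: {response}\n"
                      f"Wall time in ms: {elapsed_ms:.2f}\n\n")
            out.flush()  # keep partial results if a multi-day run is interrupted
            shutil.move(staged, os.path.join(out_dir, name))
            processed += 1
    return processed
```

Staging one file at a time means that, if a long run is interrupted, the file left in “library_in” is the one that was being processed and everything still in “read” is untouched.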

https://github.com/JayKayNJIT/InnovisionLLC/blob/719389bbef265e3c89be7a01b088b6603c6ae04a/2_LLM_Run.py

Step 3 – Postprocessing the output files from Step 2.

Finally, after finishing the most computationally expensive step of the whole workflow, we have all the information we need to process the payload numbers and see how our GPU machines performed in terms of speed, i.e. tokens per second. This step is divided into two substeps: Step 3A and Step 3B.

Step 3A – Extracting useful information from Step 2’s payload output file and calculating TPS.

This step extracts the information we need to calculate and compare the TPS for a given file across the two GPU machines. As in the previous step, the Python script (3Postproc_A_extractingNumbersFromOutputFiles.py) must be run separately for each of the “title.txt” files produced in Step 2. For each input, it generates a new text file named after the input file with “_2” appended. Once you have run it on both output files from Step 2, you will have two new files in the directory that holds the input files, e.g. title3060_2.txt and title3090_Ti_2.txt in our test case. With these two files in hand, we move on to the next and last step. The script is linked at the bottom of this step. It performs the following tasks:

- Remove metadata blocks that contain no useful information, identified by lines starting with “Empty Response” and containing no additional text. Additionally, delete 3 lines before and 2 lines after each “Empty Response” block.

- Remove lines starting with “Counter: Currently processing”.

- Remove text chunks between “Response:” and “Stderr Output:”.

- Delete the first four lines out of every five consecutive lines starting with “llama_print_timings:”.

- Delete every line that starts with “Llama.generate:”.

- Remove empty lines.

- Process lines starting with “llama_print_timings:” by:

– Extracting two floating-point values from each line.

– Calculating the TPS (Tokens Per Second) value using the extracted values.

– Replacing the line with the first floating-point value prefixed with “Time in ms:”.

– Inserting the second floating-point value prefixed with “Tokens:” and the calculated TPS value prefixed with “TPS:” on subsequent lines.

– Adding an empty line after every line that starts with “TPS:”.

The script first cleans up the input metadata file by removing unnecessary blocks and lines. It then processes the remaining data to extract relevant information related to processing PDF files. The cleaned data is then saved to a new file with “_2” appended to the original file name.
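The last task, turning each retained “llama_print_timings:” line into “Time in ms:”, “Tokens:” and “TPS:” lines, can be sketched as below. Two things are assumptions here: the sample line format, which is only modeled on a typical total-time line, and the formula TPS = tokens / (time in ms / 1000), which is the natural reading of the description but is not confirmed by the source. The function names are hypothetical.

```python
import re

# Matches plain decimal numbers such as "5000.00" or "100".
NUMBER_RE = re.compile(r"\d+(?:\.\d+)?")


def timing_line_to_block(line):
    """Turn one retained timing line into Time/Tokens/TPS lines plus a blank."""
    # Drop the "llama_print_timings:" prefix, then take the first two numbers.
    numbers = NUMBER_RE.findall(line.split(":", 1)[1])
    time_ms, tokens = float(numbers[0]), float(numbers[1])
    # Assumed formula: tokens divided by elapsed seconds.
    tps = tokens / (time_ms / 1000.0) if time_ms else 0.0
    return [f"Time in ms: {time_ms}", f"Tokens: {tokens}", f"TPS: {tps:.2f}", ""]


def rewrite_timing_lines(lines):
    """Replace every timing line with its block; keep other lines unchanged."""
    result = []
    for line in lines:
        if line.startswith("llama_print_timings:"):
            result.extend(timing_line_to_block(line))
        else:
            result.append(line)
    return result


# Assumed sample line: 100 tokens in 5000 ms gives 20 tokens per second.
sample = "llama_print_timings:       total time =    5000.00 ms /   100 tokens"
for out_line in rewrite_timing_lines(["paper_001.pdf", sample]):
    print(out_line)
```

The trailing empty string in each block corresponds to the empty line added after every “TPS:” line.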

Usage:

- Replace the value of the variable “input_file_path” with the path to the input metadata file.

- Run the script.

- The cleaned file will be saved with “_2” appended to the original file name in the same directory as the input file.

Note:

Ensure that the Python environment has access to the input file and has the necessary permissions to read from and write to files in the specified directory.

GitHub link to the Python code:

https://github.com/JayKayNJIT/InnovisionLLC/blob/7dbfe5465b9801eca51e108ef3110dcfebd74957/3Postproc_A_extractingNumbersFromOutputFiles.py

Step 3B – Comparing TPS for all files across both GPU machines.

This step takes the two files generated in Step 3A and produces one payload file (comparison.txt) and one log file (comparison_log.txt). It compares metadata blocks between the two input files, extracting timing information and identifying any discrepancies. It is designed to process files containing metadata blocks that represent PDF files and their corresponding timing data. The code has the following dependencies: Python 3.x and the regular expressions module (re). To carry out all of these tasks, we have developed a Python script (3Postproc_B_timingComparison.py), which is linked at the bottom of this step. It performs the following tasks:

- Remove Empty Lines: Before comparison, the script removes any empty lines from the input files to ensure accurate processing.

- Extract Timing Information: It extracts timing information from the fourth line of each metadata block in both input files. This information represents the time associated with each PDF file.

- Comparison: For each metadata block in the first input file, the script searches for a matching block in the second input file based on the PDF file name. If a match is found, it calculates the difference in timing between the two files and stores this information.

- Logging Mismatches: If a match cannot be found for a metadata block in the second input file, the script logs this discrepancy to a separate log file named comparison_log.txt.

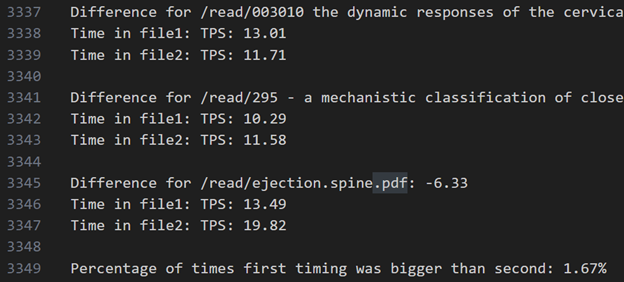

- Output to File: Instead of printing to the terminal, the comparison results, including timing differences and actual timing numbers, are written to a file named comparison.txt.

- Percentage Calculation: At the end of the comparison, the script calculates the percentage of matched files for which the value in the first file was larger than the value in the second file (for TPS, larger is better) and appends this information to the output file.
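The matching and percentage logic in the list above can be sketched as follows. The block layout is an assumption: each block is taken to be four non-empty lines (PDF name, “Time in ms:”, “Tokens:”, “TPS:”), so the fourth line carries the value being compared. The names `parse_blocks` and `compare` are hypothetical, and the sketch returns its results instead of writing comparison.txt and comparison_log.txt.

```python
def parse_blocks(text):
    """Map PDF name -> TPS, assuming 4-line blocks ending in a "TPS:" line."""
    # Empty lines are dropped first, as in the description above.
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    blocks = {}
    for i in range(0, len(lines) - 3, 4):
        name = lines[i]
        blocks[name] = float(lines[i + 3].split(":", 1)[1])
    return blocks


def compare(first_text, second_text):
    """Return (rows, unmatched, percent_first_larger)."""
    first, second = parse_blocks(first_text), parse_blocks(second_text)
    rows, unmatched = [], []
    for name, tps_first in first.items():
        if name not in second:
            unmatched.append(name)  # would go to the log file
            continue
        tps_second = second[name]
        rows.append((name, tps_first, tps_second, tps_first - tps_second))
    larger = sum(1 for _name, a, b, _diff in rows if a > b)
    percent = 100.0 * larger / len(rows) if rows else 0.0
    return rows, unmatched, percent


# Made-up example data, not measurements from the machines in this post.
first_file = """a.pdf
Time in ms: 5000.0
Tokens: 100.0
TPS: 20.00

b.pdf
Time in ms: 4000.0
Tokens: 100.0
TPS: 25.00
"""
second_file = """a.pdf
Time in ms: 2500.0
Tokens: 100.0
TPS: 40.00
"""
rows, unmatched, percent = compare(first_file, second_file)
print(rows, unmatched, percent)
```

The percentage is computed over matched files only, so files that appear in just one input do not skew the result.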

Usage:

- Ensure that the input files contain metadata blocks representing PDF files and their timing information.

- Run the script, providing the file paths for both input files.

- Review the output file comparison.txt for detailed comparison results, including timing differences and percentage of larger timings.

- Check the log file comparison_log.txt for any mismatches or errors encountered during the comparison process.

GitHub link to the Python code:

https://github.com/JayKayNJIT/InnovisionLLC/blob/bf6586f50eb1dba648fe874a03ea6f6f4d709307/3Postproc_B_timingComparison.py

After running the last step, this is what our final payload file (comparison.txt) looks like: